------------------------------------------------------------------------------------

Software Development Life Cycle (SDLC) Vs Software Test Life Cycle (STLC)

------------------------------------------------------------------------------------

STLC (Software Testing Life Cycle)

SOFTWARE TESTING LIFE CYCLE:

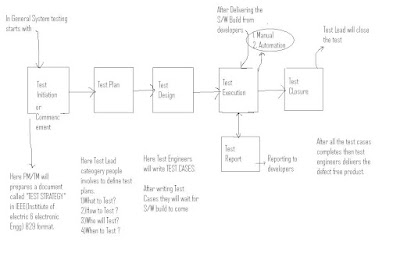

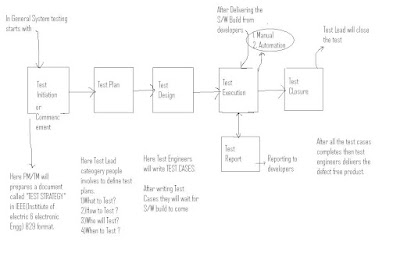

1) TEST INITIATION:

In general, the system testing process (STLC) starts with test initiation, also called test commencement. In this phase, the project manager or test manager selects reasonable approaches for the test engineers to follow, and prepares a document called the TEST STRATEGY DOCUMENT in IEEE 829 format.

A TEST STRATEGY document is developed for each project. It defines the scope and the general direction (approach) for testing in the project.

TEST STRATEGY MUST ANSWER THE FOLLOWING:

1. When will testing occur?

2. What kind of testing will occur?

3. What kinds of risks may arise?

4. What are the critical success factors?

5. What is the testing objective?

6. What tools will be used?

TEST STRATEGY DOCUMENT FEATURES ARE AS FOLLOWS:

1) SCOPE & OBJECTIVE: Brief account and purpose of the project.

2) BUDGET ISSUES: Allocated budget for the project.

3) TEST APPROACH: Defines the test approach between development stages and testing factors.

4) TEST ENVIRONMENT SPECIFICATIONS: The test documents that the testing team is required to develop during testing.

5) ROLES & RESPONSIBILITIES: The roles in both development and testing and their responsibilities.

6) COMMUNICATION & STATUS REPORTING: Required negotiation between two consecutive roles in development and testing.

7) TESTING MEASUREMENTS & METRICS: To estimate work completion in terms of quality assessment and test management process capabilities.

8) TEST AUTOMATION: Possibilities for test automation with respect to the project requirements and the availability of testing facilities (or) tools.

9) DEFECT TRACKING SYSTEM: Required negotiation between the development and testing teams to fix and resolve defects.

10) CHANGE & CONFIGURATION MANAGEMENT: Required strategy to handle change requests from the user’s side.

11) RISK ANALYSIS & MITIGATIONS or Solutions: Common problems that appear during testing and possible solutions to recover.

12) TRAINING PLAN: The required number of training sessions for test engineers before the start of the project.

NOTE: RISKS ARE FUTURE UNCERTAIN OR UNEXPECTED EVENTS WITH A PROBABILITY OF OCCURRENCE AND POTENTIAL FOR LOSS.

2) TEST PLAN: After completion of the test strategy document, the test lead defines the test plan in terms of:

WHAT TO TEST? (Development Plan)

HOW TO TEST? (From SRS document)

WHEN TO TEST? (Design document/Tentative Plan)

WHO WILL TEST? (Team Foundation)

Converting the system plan to a module plan:

SYSTEM PLAN -- MODULE PLAN

Development Plan -> Team Foundation

Test Strategy ->Risk Analysis

->Prepare test plan document

->Review on test.

TEST TEAM FORMATION:

In general, the test planning process starts with test team formation, which depends on the following factors:

Availability of testers

Availability of the test environment resources.

Identifying Tactical Risk.

After completion of test team formation, the test lead concentrates on risk analysis and mitigations (or solutions).

Types of Risks:

1. Lack of knowledge on the domain.

2. Lack of budget.

3. Lack of resources.

4. Delay in deliveries.

5. Lack of development team seriousness.

6. Lack of communication.

Prepare Test Plan Document: After completion of test team formation and risk analysis, the test lead prepares the test plan document in IEEE format as follows:

TEST PLAN ID: A unique name and number assigned to every test plan.

INTRODUCTION/SUMMARY: Brief account of the project.

TEST ITEMS: Number of modules in the project.

FEATURES TO BE TESTED: The names of the modules the team is responsible for testing.

TEST ENVIRONMENT: The documents to be prepared during testing and the H/W and S/W used in the project.

NOTE: ALL THE ABOVE POINTS ANSWER “WHAT TO TEST?”

ENTRY CRITERIA: When the test engineers can start test execution.

i. All test cases are completed

ii. Receive stable build or software build from developer

iii. Establish test environment.

SUSPENSION CRITERIA: When the test engineers may interrupt test execution.

i. Major bugs or severe bugs occur.

ii. Resources are not working.

EXIT CRITERIA: When the test engineers may stop test execution.

i. All the test cases have been completed.

ii. The schedule has been exceeded.

iii. All the defects are resolved.
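The entry, suspension, and exit criteria above can be read as simple boolean checks. The sketch below is only an illustration: the `ExecutionState` class and its field names are hypothetical, and the exit rule assumes that a schedule overrun alone is enough to stop, while otherwise all test cases must be executed and all defects resolved.

```python
from dataclasses import dataclass


@dataclass
class ExecutionState:
    """Hypothetical snapshot of a test cycle; field names are illustrative."""
    test_cases_written: bool
    stable_build_received: bool
    environment_ready: bool
    severe_bug_open: bool
    resources_working: bool
    cases_total: int
    cases_executed: int
    schedule_exceeded: bool
    open_defects: int


def can_start(s: ExecutionState) -> bool:
    # Entry criteria: all three conditions must hold.
    return s.test_cases_written and s.stable_build_received and s.environment_ready


def must_suspend(s: ExecutionState) -> bool:
    # Suspension criteria: either condition interrupts execution.
    return s.severe_bug_open or not s.resources_working


def can_stop(s: ExecutionState) -> bool:
    # Exit criteria (assumed reading): a schedule overrun forces a stop;
    # otherwise every case must be executed and every defect resolved.
    finished = s.cases_executed >= s.cases_total and s.open_defects == 0
    return s.schedule_exceeded or finished
```

A test lead could evaluate these checks at the start of each day of execution; a real project would normally record the thresholds in the test plan itself.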

TEST DELIVERABLES: The documents that the test engineers are required to submit to the test lead.

The documents are:

1. Test case documents.

2. Test summary documents.

3. Test log.

4. Defect report documents.

5. Defect summary documents.

NOTE: The above points answer HOW TO TEST?

ROLES & RESPONSIBILITIES: The work allocated to the selected test engineers and their responsibilities.

STAFF & TRAINING PLAN: The names of the selected testing team members and the number of training sessions required for them.

NOTE: The above two points answer WHO WILL TEST?

SCHEDULE: The dates and times allocated for the project.

APPROVALS: Signatures of the PM, QA, and other responsible people.

NOTE: The above two points answer WHEN TO TEST?

REVIEW ON TEST PLAN: After completion of the test plan document, the test lead reviews the document for completeness and correctness.

In this review meeting, the testing team conducts coverage analysis.

3) TEST DESIGN PHASE: After the test plan document is prepared, the test engineers selected by the team leaders concentrate on preparing test cases in IEEE 829 format.

TEST CASE: A set of inputs, execution conditions, and expected results developed for a particular objective or test object, such as to exercise a particular program path (or) to verify compliance with a specific requirement.

A test case is not necessarily designed to expose a defect, but to gain knowledge or information.

E.g. whether the program passes or fails the test.

WHY ARE TEST CASES REQUIRED?

They are necessary to verify successful and acceptable implementation of the product requirements.

They help find problems in the requirements of an application.

They determine whether the client’s expectations have been met.

They help testers make SHIP (valid) or NO SHIP (invalid) decisions.

1. Randomly selecting or writing test cases does not indicate effective testing.

2. Writing a large number of test cases does not mean that many errors in the system will be uncovered.

The test engineers write test cases using 2 methods:

1) USER INTERFACE BASED TEST CASE DESIGN.

2) FUNCTIONAL & SYSTEM BASED TEST CASE DESIGN.

USER INTERFACE BASED TEST CASE DESIGN: A test case in application development is a set of conditions or variables under which a tester determines whether an application or software system is working correctly. All the test cases below are static, because they can be applied to the build without operating it.

TEST CASE TITLES:

1. Check the spellings.

2. Check font uniqueness in every screen.

3. Check style uniqueness in every screen.

4. Check label uniqueness in every screen.

5. Check color contrast in every screen.

6. Check alignments of objects in every screen.

7. Check name uniqueness in every screen.

8. Check spacing uniqueness in between labels and objects.

9. Check dependent object grouping.

10. Check border of object group.

11. Check tool tips of icons in all screens.

12. Check abbreviations (or) full forms.

13. Check multiple data object positions in all screens. Eg) List Box, Menu, Tables.

14. Check Scroll bars in every screen.

15. Check short cut keys in keyboard to operate on our build.

16. Check visibility of all icons in every screen.

17. Check help documents. (Manual support testing)

18. Check Identity controls. Eg) Title of software, Version of S/w, Logo of company, Copy Rights etc.

NOTE: The above usability test cases are applicable to any GUI application for usability testing.

For the above test cases, testers assign the priority “P2”.

Test Case Prioritization

Different organizations use different scales for prioritizing test cases. One of the most commonly used scales prioritizes test scenarios into the following 4 priority levels.

1. BVT

2. P1

3. P2

4. P3

BVT: (Build Verification Test)

A test scenario marked as BVT verifies the core functionality of the component. If a BVT fails, it may block further testing of the component, so it is suggested not to release the build. To certify the build, all BVTs should execute successfully.

E.g. Installation of Build.

P1 (Priority 1 Test)

A test scenario marked as P1 verifies the extended functionality of the component. It verifies most of the functionalities of the component. To certify an interim release of the build, all BVTs and the majority of P1 tests should execute successfully.

E.g. Installation of Build with different flavors of Database (MSDE or SQL)

P2 (Priority 2 Test)

A test scenario marked as P2 verifies the extended functionality of the component at a lower priority. It verifies most of the functionalities of the component. To certify the release of the build, all BVTs and the majority of P1 and P2 tests should execute successfully.

E.g. Validation, UI testing and logging, etc.

P3 (Priority 3 Test)

A test scenario marked as P3 verifies error handling, cleanup, logging, help, usage, documentation errors, spelling mistakes, etc. for the components.
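The certification rules for BVT, P1, and P2 can be sketched as a small function. This is an illustration under stated assumptions, not a standard: "majority" is taken to mean a pass rate above 50%, a priority level with no test cases is treated as fully passed, and the function names and return labels are hypothetical.

```python
from collections import defaultdict


def pass_rates(results):
    """results: iterable of (priority, passed) pairs, e.g. ("BVT", True)."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for priority, ok in results:
        totals[priority] += 1
        if ok:
            passed[priority] += 1
    return {p: passed[p] / totals[p] for p in totals}


def certification_level(results, majority=0.5):
    """Return 'blocked', 'build', 'interim release', or 'release'."""
    rates = pass_rates(results)
    # Assumption: a priority level with no test cases counts as fully passed.
    bvt = rates.get("BVT", 1.0)
    p1 = rates.get("P1", 1.0)
    p2 = rates.get("P2", 1.0)
    if bvt < 1.0:
        return "blocked"          # a failed BVT blocks further testing
    if p1 > majority and p2 > majority:
        return "release"          # all BVTs plus majority of P1 and P2
    if p1 > majority:
        return "interim release"  # all BVTs plus majority of P1
    return "build"                # only the BVTs certify the build
```

The `majority` parameter is exposed because organizations that use this scale may set a stricter threshold than a simple majority.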

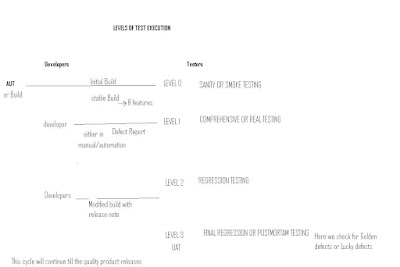

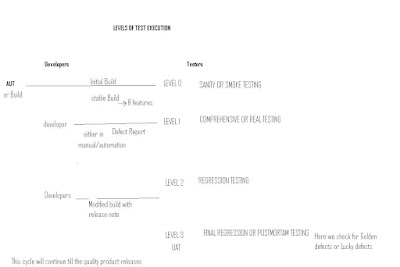

4) TEST EXECUTION: After completion of test case preparation, the test engineers concentrate on the levels of testing.

LEVEL 0 – SANITY TESTING

In practice, the test execution process starts with sanity testing to estimate the stability of the build. In sanity testing, testers concentrate on the following factors by covering the basic functionalities of the build.

1. UNDERSTANDABLE

2. OPERABLE

3. OBSERVABLE

4. CONTROLLABLE

5. CONSISTENCY

6. SIMPLICITY

7. MAINTAINABLE

8. AUTOMATABLE

Level 0 sanity testing estimates the testability of the build. It is also known as SANITY TESTING (or) SMOKE TESTING (or) TESTABILITY TESTING (or) BUILD ACCEPTANCE TESTING (or) BUILD VERIFICATION TESTING (or) TESTER ACCEPTANCE TESTING (or) OCTANGLE TESTING.

LEVEL 1 – COMPREHENSIVE TESTING (or) REAL TESTING

After completion of Level 0 (sanity testing), testers conduct Level 1 real testing to detect defects in the build.

In this level, testers execute all the test cases, either manually or through automation, as “TEST BATCHES”.

Every TEST BATCH consists of a set of dependent test cases. Test batches are also known as a TEST SUITE, TEST CHAIN, or TEST BELT.

While executing test batches on the build, testers prepare a “TEST LOG” document with 3 types of entries:

1. PASS (the expected value is equal to the actual value)

2. FAIL (the expected value is not equal to the actual value)

3. BLOCKED (postponed due to lack of time)
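The three kinds of test log entries can be illustrated with a short sketch. The names `Result`, `log_entry`, and `summarize` are hypothetical, and the test log is assumed to be a simple list of `(test case id, result)` pairs.

```python
from collections import Counter
from enum import Enum


class Result(Enum):
    PASS = "PASS"
    FAIL = "FAIL"
    BLOCKED = "BLOCKED"


def log_entry(expected, actual, executed=True):
    """Classify one test case for the test log."""
    if not executed:
        # The case was postponed, so there is no actual value to compare.
        return Result.BLOCKED
    return Result.PASS if expected == actual else Result.FAIL


def summarize(test_log):
    """Count entries of each type in a list of (test_case_id, Result) pairs."""
    counts = Counter(entry for _, entry in test_log)
    return {r.value: counts.get(r, 0) for r in Result}
```

For example, a batch of three test cases where one passes, one fails, and one is postponed produces a summary with a count of one for each entry type.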

LEVEL 2 – REGRESSION TESTING

During Level 1 (real or comprehensive testing), testers report mismatches between the expected values of their tests and the actual values of the build as defect reports.

After receiving defect reports from testers, the developers conduct a review meeting to fix the defects.

If the developers accept the defects, they change the code and release a modified build with a release note. The release note of a modified build describes the changes made in that build to resolve the reported defects.

Testers then plan the regression testing to be conducted on the modified build with respect to the release note.

APPROACH:

Receive the modified build along with the release note from the developers.

Apply sanity testing to the modified build.

Run the selected test cases on the modified build to ensure that the modification is correct and has no side effects in the build.

Side effects: effects on other functionalities.

After completing the required level of regression testing, the testers conduct the remaining Level 1 test execution.
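One way to select regression test cases from a release note is sketched below. It is a minimal illustration, assuming the release note lists the changed modules and that the team keeps a mapping from test cases to the modules they exercise. The function name, the data shapes, and the one-level dependency check for side effects are all hypothetical.

```python
def select_regression_cases(changed_modules, coverage, depends_on):
    """
    changed_modules: module names listed in the release note.
    coverage: dict mapping test case id -> set of modules it exercises.
    depends_on: dict mapping module -> set of modules it depends on.
    """
    changed = set(changed_modules)
    # Modules that directly depend on a changed module may show side effects.
    # Only one level of dependency is followed in this sketch.
    impacted = set(changed)
    for module, deps in depends_on.items():
        if deps & changed:
            impacted.add(module)
    # Keep every test case that touches a changed or impacted module.
    return sorted(tc for tc, modules in coverage.items() if modules & impacted)
```

With a release note that changes only a `login` module, and a `reports` module that depends on `login`, the test cases covering both modules are selected while unrelated ones are left for the remaining Level 1 execution.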

LEVEL 3 – FINAL REGRESSION

After completion of the entire testing process, the testing team concentrates on Level 3 test execution.

This level of testing is also known as POST MORTEM TESTING (or) FINAL REGRESSION TESTING (or) PRE-ACCEPTANCE TESTING.

Any defect found during this level is called a GOLDEN DEFECT (or) LUCKY DEFECT.

After resolving all golden defects, the testing team concentrates on UAT along with the developers.

The act of running a test and observing the behavior of the application and its environment is called TEST EXECUTION.

The term also refers to the sequence of actions that must be performed before a test can be said to be complete.

TEST AUTOMATION EXECUTION – A software tool executes all the test cases, in the form of test scripts, on the application.

5. TEST REPORT: During Level 1 & Level 2, if testers find any mismatch between their expected values and the actual values of the build, they report it as a defect report in IEEE format.

There are 3 ways to submit a defect to the developer:

1. CLASSICAL BUG REPORTING PROCESS.

DRAWBACKS:

• Time consuming.

• No transparency.

• Redundancy (repeating).

• No security (hackers may hack the mails).

NOTE:

TE : TEST ENGINEERS

D : DEVELOPERS

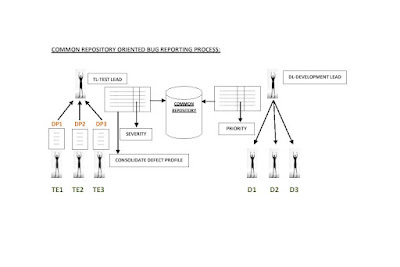

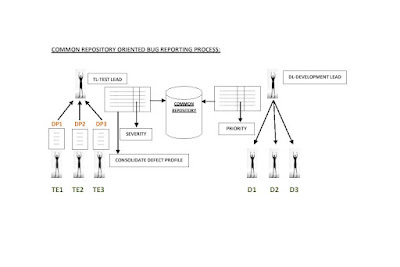

2. COMMON REPOSITORY ORIENTED BUG REPORTING PROCESS.

COMMON REPOSITORY: A server that allows only authorized people to upload and download.

DRAWBACKS:

• Time consuming.

• No transparency.

• Redundancy (repeating).

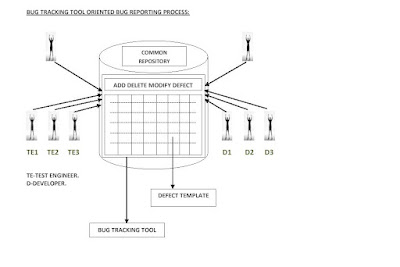

3. BUG TRACKING TOOL ORIENTED BUG REPORTING PROCESS.

BUG TRACKING TOOL: A software application that can be accessed only by authorized people and provides all the facilities for bug tracking and reporting.

Ex: BUGZILLA, ISSUE TRACKER, PR TRACKER (PR PERFORMANCE REPORTER)

No Transparency: The test engineer can’t see what is happening in the development department, and the developer can’t see what is going on in the testing department.

Redundancy: There is a chance that the same defect will be found by all the test engineers.

Ex: Suppose all the test engineers find a defect in the login button of the login screen; they then all raise the same defect.

BUG TRACKING TOOL ORIENTED BUG REPORTING PROCESS:

The test engineer logs into the bug tracking tool, adds the defect to the template with the add defect feature, and writes the defect details in the corresponding columns. The test lead observes it in parallel through the bug tracking tool and assigns the severity.

The development lead also logs into the bug tracking tool. He assigns the priority and assigns the task to a developer. The developer logs into the tool, understands the defect, and rectifies it.

Tool: Something that is used to complete the work easily and accurately.

Note: Some companies use their own bug tracking tool, developed by the company itself; such tools are called ‘INHOUSE TOOLS’.

6. TEST CLOSURE ACTIVITY: This is the final activity in the testing process. It is done by the test lead, who prepares the test summary report, which contains information such as:

Number of cycles of execution

Number of test cases executed in each cycle

Number of defects found in each cycle

Defect ratio, etc.
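The figures in a test summary report can be tabulated with a few lines of code. The sketch below is illustrative only: the text does not define the defect ratio, so it is assumed here to be defects found divided by test cases executed in a cycle, and the function name and input shape are hypothetical.

```python
def build_summary(cycles):
    """cycles: list of dicts with 'executed' and 'defects' counts per cycle."""
    rows = []
    for number, cycle in enumerate(cycles, start=1):
        executed = cycle["executed"]
        defects = cycle["defects"]
        # Assumed definition: defects found per executed test case.
        ratio = defects / executed if executed else 0.0
        rows.append({
            "cycle": number,
            "executed": executed,
            "defects": defects,
            "defect_ratio": round(ratio, 3),
        })
    return {"cycles": len(cycles), "rows": rows}
```

A falling defect ratio from one cycle to the next is one signal a test lead might cite in the closure report, although the report format itself varies between organizations.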

DEFECT LIFE CYCLE

Defect Severity

Defect Severity is classified into four types:

FATAL: Unavailability of functionality or navigational problems.

MAJOR: Defects in major functionalities.

MEDIUM: The defect is not severe, but it should be resolved.

MINOR: Defects related to LOOK AND FEEL.

These defects have to be rectified.

Generally, severity is assigned by senior test engineers and the test lead, and can be changed by project managers or leads depending on the situation.

STATUS: The status of the defect:

NEW – When the defect is identified by the test engineer for the first time, he sets the status to NEW.

OPEN – When the developer accepts the defect, he sets the status to OPEN.

RE-OPEN – If the test engineer feels that the raised defect has not been rectified properly by the developer, the tester sets the status to RE-OPEN.

CLOSE – When the test engineer feels that the raised defect is resolved, he sets the status to CLOSE.

FIXED – When the raised defect has been accepted and rectified by the developer.

HOLD – When the developer is undecided whether to accept or reject the defect.

AS PER DESIGN – When the developer has incorporated new changes into the build and the tester, unaware of them, raises a defect.

TESTERS ERROR – If the developer feels that it is not a defect at all but the result of an incorrect process followed by the tester, the developer sets the status to TESTERS ERROR.

REJECT – Developers require more clarity.
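The statuses above can be modeled as a small state machine. The text names the statuses but does not list every allowed move between them, so the transition table below is one plausible reading rather than a definitive workflow; `TRANSITIONS`, `change_status`, and `replay` are hypothetical names.

```python
# Hypothetical transition table; the edges are an assumed reading of the
# status descriptions, not a rule taken from any specific tracking tool.
TRANSITIONS = {
    "NEW": {"OPEN", "HOLD", "REJECT", "AS PER DESIGN", "TESTERS ERROR"},
    "HOLD": {"OPEN", "REJECT"},
    "OPEN": {"FIXED"},
    "FIXED": {"CLOSE", "RE-OPEN"},
    "RE-OPEN": {"FIXED"},
}


def change_status(current, new):
    """Return the new status, or raise if the move is not in the table."""
    if new not in TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot move defect from {current} to {new}")
    return new


def replay(history):
    """Apply a sequence of statuses starting from NEW; return the final one."""
    status = "NEW"
    for new in history:
        status = change_status(status, new)
    return status
```

A defect that is fixed, re-opened by the tester, fixed again, and then closed follows the path OPEN, FIXED, RE-OPEN, FIXED, CLOSE in this table.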

FIX-BY/DATE/BUILD: The developer who fixed the defect, the date of the fix, and the build number are recorded in this section.

DATE CLOSURE: The date on which the defect is rectified is recorded in this section.

DEFECT AGE: The time gap between “Reported on” and “Resolved On”.
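Defect age is a simple date difference. The sketch below assumes the age is counted in whole days, and that a defect which is still unresolved is aged up to the current date; that second rule is an assumption, not something stated in the definition above.

```python
from datetime import date


def defect_age(reported_on, resolved_on=None, today=None):
    """Days between 'Reported on' and 'Resolved on'.

    If the defect is unresolved, age is measured up to `today`
    (assumed behavior; defaults to the current date).
    """
    end = resolved_on or today or date.today()
    return (end - reported_on).days
```

For a defect reported on 1 March 2024 and resolved on 11 March 2024, the function returns 10.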

TYPES OF DEFECTS

1. User Interface Bugs: SUGGESTIONS

Ex 1: Spelling Mistake → High Priority

Ex 2: Improper alignment → Low Priority

2. Boundary Related Bugs: MINOR

Ex 1: Does not allow valid type → High Priority

Ex 2: Allows invalid type also → Low Priority

3. Error Handling Bugs: MINOR

Ex 1: Does not provide an error message window → High Priority

Ex 2: Improper meaning of error messages → Low Priority

4. Calculation Bugs: MAJOR

Ex 1: Final output is wrong → Low Priority

Ex 2: Dependent results are wrong → High Priority

5. Race Condition Bugs: MAJOR

Ex 1: Dead Lock → High Priority

Ex 2: Improper order of services → Low Priority

6. Load Condition Bugs: MAJOR

Ex 1: Does not allow multiple users to operate → High Priority

Ex 2: Does not allow customer expected load → Low Priority

7. Hardware Bugs: MAJOR

Ex 1: Does not handle device → High Priority

Ex 2: Wrong output from device → Low Priority

8. ID Control Bugs: MINOR

Ex: Logo missing, wrong logo, version no mistake, copyright window missing, developers name missing, tester names missing.

9. Version Control Bugs: MINOR

Ex: Difference between two consecutive build versions.

10. Source Bugs: MINOR

Ex: Mistakes in help documents.

WAYS OF TESTING: There are 2 ways of testing:

1. MANUAL TESTING

2. AUTOMATION TESTING

1. MANUAL TESTING: Manual testing is a process in which all the phases of the STLC (Software Testing Life Cycle), such as test planning, test development, test execution, result analysis, bug tracking, and reporting, are accomplished manually with human effort.

DRAWBACKS:

1. More human resources are required.

2. Time consuming.

3. Less accuracy.

4. Tiredness.

5. Simultaneous actions are almost impossible.

6. Repeating the same task again and again in the same fashion is almost impossible.

2. AUTOMATION TESTING: Automation testing is a process in which the drawbacks of manual testing are addressed, adding speed and accuracy to the existing testing process.

DRAWBACKS:

1. Automated tools are expensive.

2. All the areas of the application can’t be tested successfully with the automated tools.

3. Lack of automation Testing experts.

Note: Automation testing is not a replacement for manual testing; it is a continuation of manual testing.

Note: Automation Testing is recommended to be implemented only after the application has come to a stable stage.

TERMINOLOGY:

DEFECT PRODUCT: If the product does not satisfy some of the requirements but is still useful, it is known as a defect product.

DEFECTIVE PRODUCT: If the product does not satisfy some of the requirements and is also not usable, it is known as a defective product.

QUALITY ASSURANCE: A department that checks each and every role in the organization to confirm whether they are working according to the company process guidelines.

QUALITY CONTROL: A department that checks whether the developed products or their related parts are working according to the requirements.

NCR: If a role is not following the process, the penalty given is known as an NCR (Non Conformances Raised). Ex: IT-NCR, NON IT-MEMO.

INSPECTION: It is a process of sudden checking conducted on the roles (or) department, without any prior intimation.

AUDIT: It is a process of checking, conducted on the roles (or) department with prior intimation well in advance.

There are 2 types of Audits:

1. INTERNAL AUDIT

2. EXTERNAL AUDIT

INTERNAL AUDIT: If the audit is conducted by the internal resources of the company, it is known as an internal audit.

EXTERNAL AUDIT: If the audit is conducted by external people, it is known as an external audit.

AUDITING: To audit Testing Process, Quality people conduct three types of Measurements & Metrics.